Data Security Risk

According to the Gartner Market Guide for Artificial Intelligence Trust, Risk and Security Management (AI TRiSM), “two in five organizations had an AI privacy breach or security incident.” That finding highlights the importance of applying privacy controls to data used in interactions with AI models. You don't want your organization to be one of those two in five.

Problem

Many companies and organizations are keen to explore, or have already started using, Large Language Models (LLMs) to improve business operations, increase efficiency, enhance employee productivity, and support scientific research. However, they are also concerned that employees may unintentionally reveal confidential or sensitive information in the process. Additionally, while a custom language model may be trained on proprietary data, it’s important to ensure that the model’s output does not contain sensitive information, especially if vendors, suppliers, or other external entities have access to it.

LayerP Is the Solution!

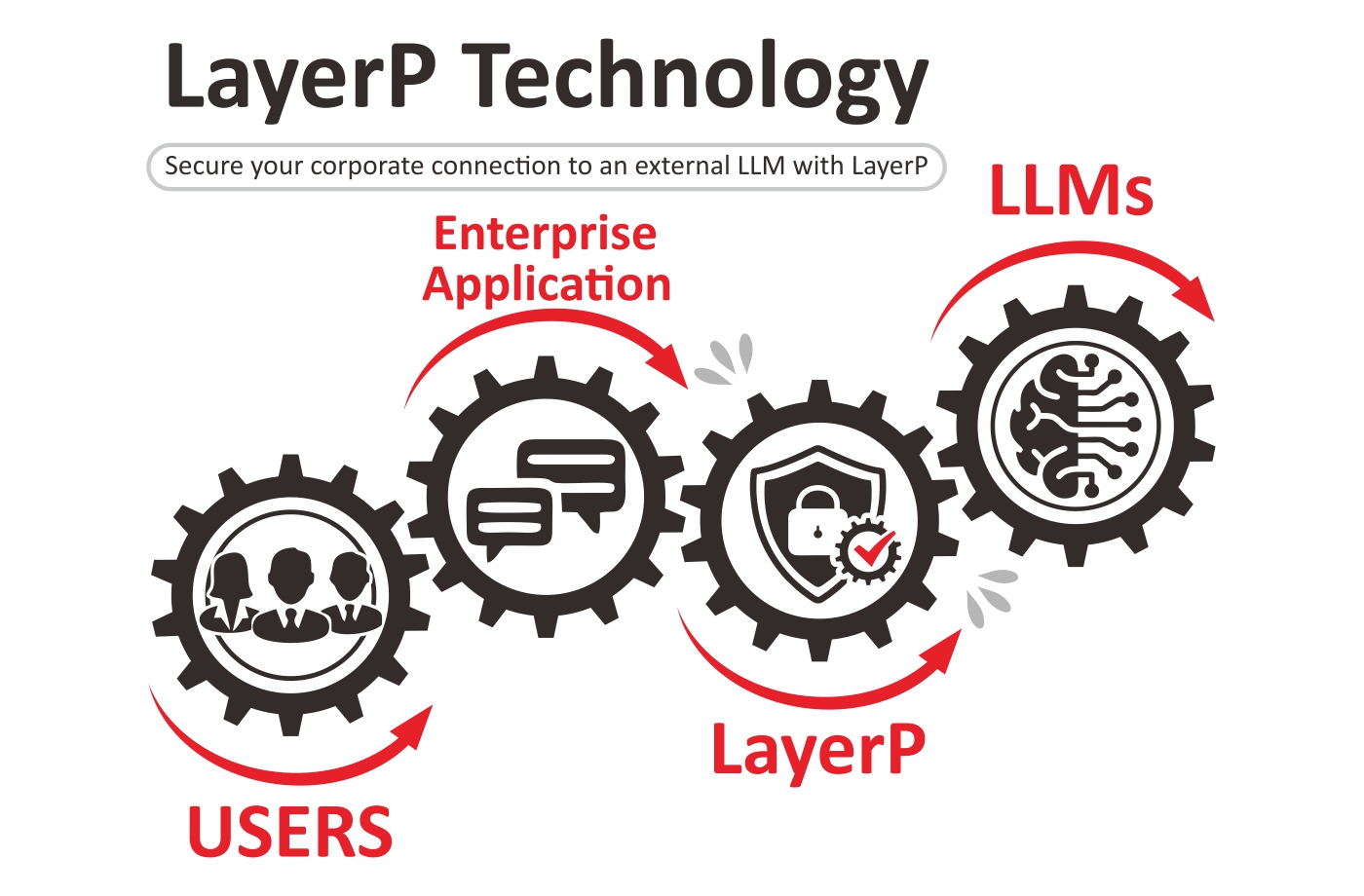

LayerP technology provides a shield that protects organizations' sensitive information and intellectual property from inadvertently leaking to or from Large Language Models (LLMs).

Imagine a security layer positioned between corporate users and an LLM that automatically detects whether an outgoing prompt or an incoming model response contains confidential or sensitive information. When it does, the layer alerts the user and stops the prompt from reaching the LLM or the response from reaching the user. This approach effectively prevents accidental leakage of sensitive information. Because the layer is thin, it adds minimal delay (less than one second), ensuring a seamless user experience with low latency.
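To make the idea concrete, here is a minimal Python sketch of such a layer. It is illustrative only and is not LayerP's implementation: the names `SENSITIVE_PATTERNS`, `find_sensitive`, `guarded_call`, and `GuardResult` are hypothetical, the three regular expressions are stand-ins for whatever detection a real product would use, and the `llm` argument is assumed to be any function that takes a prompt string and returns a response string.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical detection rules for illustration; a real detector would be much broader.
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
}


@dataclass
class GuardResult:
    blocked: bool            # True if the prompt or the response was stopped
    reason: Optional[str]    # Message shown to the user when something is blocked
    text: Optional[str]      # The model response, only when nothing was blocked


def find_sensitive(text: str) -> List[str]:
    """Return the names of all patterns that match the given text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def guarded_call(prompt: str, llm: Callable[[str], str]) -> GuardResult:
    """Check the outgoing prompt, call the model, then check the incoming response."""
    hits = find_sensitive(prompt)
    if hits:
        # Stop the prompt before it ever reaches the model.
        return GuardResult(True, "prompt blocked: " + ", ".join(hits), None)

    response = llm(prompt)

    hits = find_sensitive(response)
    if hits:
        # Stop the response before it reaches the user.
        return GuardResult(True, "response blocked: " + ", ".join(hits), None)

    return GuardResult(False, None, response)
```

In use, the layer would wrap every model call, for example `guarded_call("My SSN is 123-45-6789", my_llm)`, where `my_llm` is a placeholder for the actual model client. A blocked result carries a reason that can be surfaced to the user as the alert, and the check runs in both directions, matching the description above.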

Who We Are

LayerP Technology, LLC, based in Greenville, South Carolina, was founded by technologist Don Chunshen Li.